Unit 3 - Sampling in qualitative research

3.1 - Welcome to the Unit

Welcome to Unit 3. In this unit, we explore decisions about who, and how many people, to include in qualitative studies. We will look specifically at non-probability sampling, how to determine sample size, and debates about data saturation.

Unit Content

→ Non-probability sampling

→ Determining sample sizes

→ Data saturation

3.2 - How do I choose a sample?

As in quantitative research, the choice of a qualitative sample must be both systematic and rational.1 Sampling is complex and requires pre-planning so that the sample is appropriate (i.e. fits the aim of the research) and adequate (i.e. generates sufficient, rich data).1 While random and stratified samples can be used in qualitative studies, non-probability sampling is more likely. The most common form of non-probability sampling is ‘purposive sampling’, a technique used to carefully select information-rich cases to address the research aim (NB: the terms ‘purposive’, ‘purposeful’ and ‘criterion-based’ tend to be used interchangeably).

“The logic and power of purposeful sampling lies in selecting information-rich cases for study in depth. Information-rich cases are those from which one can learn a great deal about issues of central importance to the purpose of the inquiry, thus the term purposeful sampling.”2(p.230)

In qualitative studies, participants are usually selected because they are deemed to be ‘information rich’. As such they will have an in-depth understanding of the phenomenon under investigation.3 Sampling is therefore deliberate and non-random.

Researchers will know the characteristics of these ‘information rich participants’ and should list them transparently as inclusion and exclusion criteria. In some studies the researchers will want a relatively homogeneous sample; in others a heterogeneous sample is favoured. Flick4 warns that a sample that is too homogeneous may stifle meaningful comparison, whereas it may be difficult to find areas of commonality if a sample is too heterogeneous, so a careful balance is needed. Researchers should weigh up the benefits and limitations of their decisions about who to include in the study and evaluate the impact of these decisions on their findings.
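One way to keep inclusion and exclusion criteria transparent is to write them down as explicit rules before recruitment begins. The sketch below is purely illustrative: the `Candidate` fields, the thresholds and the participant pool are hypothetical examples, not criteria recommended by this unit.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A potential participant; all fields are hypothetical examples."""
    name: str
    years_in_role: int
    has_lived_experience: bool
    known_to_researcher: bool


def is_eligible(c: Candidate) -> bool:
    """Apply explicit, pre-stated criteria so sampling decisions are transparent."""
    # Inclusion criteria (assumed for illustration only).
    meets_inclusion = c.has_lived_experience and c.years_in_role >= 2
    # Exclusion criterion (assumed for illustration only).
    excluded = c.known_to_researcher
    return meets_inclusion and not excluded


# Hypothetical pool of people who could be approached.
pool = [
    Candidate("P01", 5, True, False),
    Candidate("P02", 1, True, False),   # too little time in role
    Candidate("P03", 8, False, False),  # no experience of the phenomenon
    Candidate("P04", 3, True, True),    # excluded: prior relationship
    Candidate("P05", 2, True, False),
]

eligible = [c.name for c in pool if is_eligible(c)]
print(eligible)
```

Writing the criteria as a single function makes it easy to report them in a protocol and to see exactly why each person was or was not approached.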

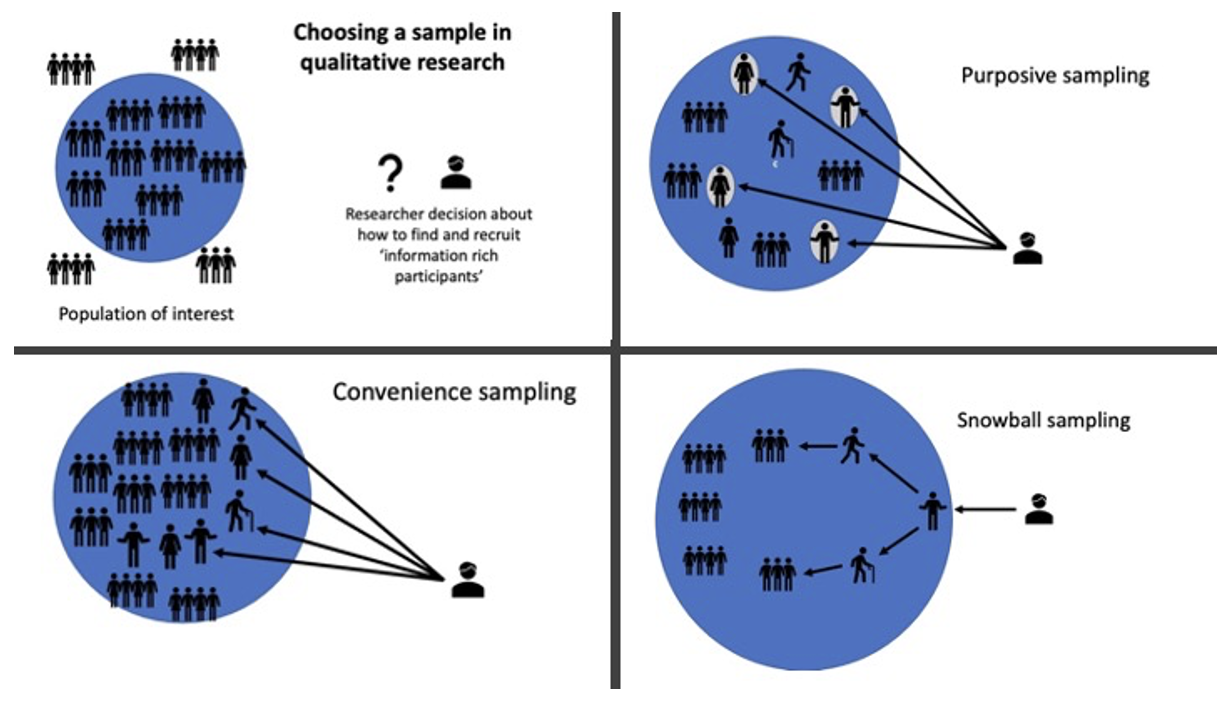

Figure 3.1 illustrates three popular forms of non-probability sampling; however, there are others and Table 3.1 explains a little more about these approaches.

Figure 3.1: Qualitative approaches to sampling

| Sampling approach | Definition |
|---|---|
| Purposive/purposeful/criterion-based | Selection of participants based on a priori criteria. |
| Convenience/opportunistic | Participants are easily accessible to the researcher. |
| Snowball/chain | Asking interviewees to identify others relevant to the aims of the study. |
| Typical | To illustrate what is ‘normal’ or average. |
| Unique | Atypical, rare or unusual presentation. |
| Maximum variation | To document unique variations and shared patterns despite heterogeneity. |
| Theoretical | Characteristics of the sample evolve during data collection and analysis. Typically used in a specific qualitative research approach called grounded theory. |

Table 3.1: Types of non-random sampling

In most of the approaches to sampling described in Table 3.1, the characteristics of the sample are known before the researchers recruit their participants. In contrast, theoretical sampling is used when not all of the preferred sample characteristics are known a priori. Researchers may start with a criterion-based sample and then make progressive sampling decisions during data collection and analysis, as characteristics of interest evolve. These evolving decisions about who should be interviewed next help the researcher to develop and test their emergent theory. This approach is commonly associated with grounded theory methodology.5

Key Points

How do I choose a sample?

→ Samples in qualitative research are usually non-probability, i.e. purposive.

→ Samples must address the research aim and generate enough data to be deemed sufficient to answer the research question.

→ There is a range of non-probability sampling techniques, including purposive, typical, convenience, snowball and maximum variation.

→ In samples which are too heterogeneous it may be difficult to find areas of commonality.

→ Samples which are too homogeneous may not facilitate meaningful comparison.

3.3 - How many people should be in a qualitative sample?

Deciding how many participants to include in a qualitative study is an important step. While a large sample size may be appealing, it is more typical for qualitative researchers to limit themselves to a small sample, ranging from a single case study to samples of between 4 and 40.1 While this may not seem large enough to those more familiar with quantitative studies, smaller samples facilitate the in-depth, detailed analysis that qualitative studies often require.

When thinking about sample size, it is worth bearing in mind that the aim of qualitative research is not statistical generalisation. Rather, the aim is to find out what can be learnt about the field of interest from a specific case or cases, and what can then be transferred from those cases to others. In this sense, the aim of qualitative research is ‘transferability’. The context and selection of participants therefore play an important role in the final ‘fittingness’ between the data source and the target population.6

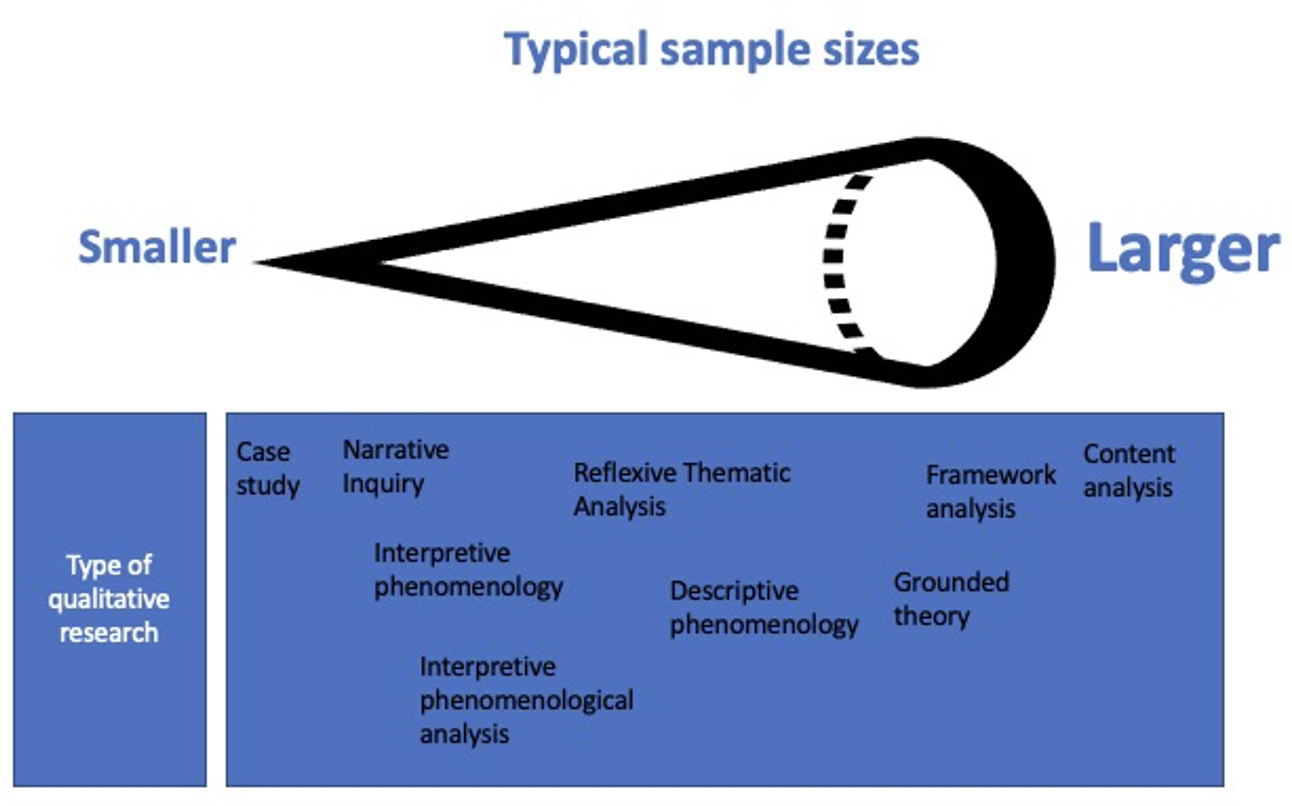

While it may be misleading to cite typical sample sizes, it is useful to illustrate how research designs differ in their approach to sample size, e.g. which designs most commonly rely on smaller samples and which can accommodate larger ones. Typically, research designs requiring a more in-depth interpretive analysis of individual experiences recruit fewer people, whereas those taking a more general or descriptive approach to the analysis often recruit more. In case-study methodology, for example, the sample may be as small as one, but it may include multiple datasets for an extremely in-depth analysis of that one case. In contrast, a content or framework analysis conducted by a team of researchers may support a much larger sample, perhaps 50 or more, as the focus is largely on the frequency of data items rather than in-depth interpretation. Figure 3.2 attempts to illustrate the differences in sample sizes between common qualitative designs rather than to provide sample size parameters.

Figure 3.2: Common qualitative designs and sample sizes

In truth, there are no set sample sizes in qualitative research. For novice researchers this creates a problem: how can they know they have recruited enough people to be confident of their findings? Holloway and Galvin1 suggest that even a sample of one can yield useful results. However, medical journals, funders and possibly even examiners do seem to prefer larger sample sizes, even in qualitative research. Despite this preference, it is important to state that sample size is not a determinant of quality or importance.1 Studies which do commit to larger sample sizes must be adequately resourced so that the analysis can be completed at the depth and complexity required by the proposed analytical technique.

The lack of explicit guidance has led qualitative researchers to use a concept called ‘data saturation’ to justify their sample size. This concept is discussed further below.

Key Points

How big should the sample size be?

→ There are no sample size calculators for qualitative studies.

→ Qualitative studies do not usually aim for generalisation; instead they aim for transferability, therefore sample sizes can be small.

→ Typical samples range from 4 to 40, but this is highly variable and study specific.

→ Sample size must be balanced with data points, data types, methodological/analytical approach and resources available.

3.4 - What is data saturation?

Data saturation is a term frequently used in qualitative research, often to justify the researcher’s sample size. It is defined as follows:

“Data saturation is reached when no new analytical information arises anymore, and the study provides maximum information on the phenomenon.”7

In this definition there are two distinct points:

- the first is the point beyond which no new analytical information arises

- the second is the point at which the maximum amount of information on the topic of interest is provided.8
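The first of these points can be made concrete by counting how many previously unseen codes each successive interview contributes. The following is a minimal sketch only: the function names and the interview codes are hypothetical, and it says nothing about the second point (richness of meaning), which cannot be reduced to a count.

```python
def new_codes_per_interview(coded_interviews):
    """Return, for each interview in order, the codes not seen in any earlier interview."""
    seen = set()
    new_per_interview = []
    for codes in coded_interviews:
        fresh = set(codes) - seen
        new_per_interview.append(fresh)
        seen |= fresh
    return new_per_interview


def last_interview_with_new_codes(coded_interviews):
    """Return the 1-based position of the last interview that added a new code (0 if none)."""
    last = 0
    for position, fresh in enumerate(new_codes_per_interview(coded_interviews), start=1):
        if fresh:
            last = position
    return last


# Hypothetical codes assigned to five interviews, in the order they were analysed.
interviews = [
    {"access", "trust"},
    {"trust", "cost"},
    {"cost", "stigma"},
    {"access", "stigma"},
    {"trust", "cost"},
]

new_code_counts = [len(fresh) for fresh in new_codes_per_interview(interviews)]
print(new_code_counts)
print(last_interview_with_new_codes(interviews))
```

In this invented example no new codes appear after the third interview. A tally like this describes only the data already analysed; it cannot show that a further interview would add nothing.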

3.5 - Why use data saturation?

Given the lack of definitive guidance for sample sizes in qualitative research, qualitative researchers have often struggled to defend their decision about how many people to recruit to their study. In quantitative studies the size of the sample is key to generalisability. Bigger is usually better as the researchers strive for a representative sample. In comparative studies like RCTs a power calculation will determine how many participants are required to avoid a type II error.9 Objectivity and clarity in sample size estimation are persuasive and give both the researcher and the consumer of the research confidence that the results are likely to be true rather than having occurred by chance.9 When qualitative studies are examined from this position, the lack of certainty about sample sizes creates a problem for those who want to feel similarly confident about the results.

Consequently, qualitative researchers have felt the need to legitimise their small sample sizes, and data saturation has become a popular means of doing this. When researchers claim to have reached data saturation, they are signalling that they reached a point during analysis at which nothing new was found despite new data being analysed.
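For readers less familiar with quantitative designs, the sketch below shows what a power calculation of the kind mentioned above can look like. It is not taken from the cited sources: it assumes a two-sided comparison of two group means with equal group sizes, uses the common normal-approximation formula, and expresses the expected difference as a standardised effect size. The function name and default values are illustrative assumptions.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate participants needed per group for a two-group comparison of means.

    Assumptions (illustrative): two-sided test, equal group sizes, normal
    approximation, and effect_size given in standard deviation units.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # guards against a type I error
    z_beta = NormalDist().inv_cdf(power)           # guards against a type II error
    return ceil(2 * ((z_alpha + z_beta) / effect_size) ** 2)


for d in (0.2, 0.5, 0.8):
    print(d, n_per_group(d))
```

Smaller expected effects demand much larger samples. No equivalent formula exists for qualitative studies, which is part of why the justification of sample size there has to rest on argument rather than calculation.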

Some authors are very clear that data saturation is the gold standard in qualitative research and that, for qualitative research to be credible, data saturation must be reached in the analysis. Appraisal tools such as CASP10 and the COREQ11 checklist for reporting qualitative research also ask if data saturation was reached, meaning those publishing qualitative manuscripts are normally required to include a discussion or statement on data saturation.

3.6 - Using data saturation to justify sample sizes

Given the persuasive influence of data saturation, there has been something of a quest to determine how big a sample is needed to achieve it. After all, such parameters would be very helpful when designing studies and when defending the application of study findings to people outside the study sample. In an attempt to provide such guidance, Hennink et al.8 asked ‘how many interviews are enough?’. Their study demonstrated that the point at which no new information arises was reached after as few as nine interviews.8 Similarly, Guest et al.12 found that data saturation occurred after 12 interviews. However, Hennink et al.8 also found that a larger sample was required to develop a meaningful and rich interpretation sensitive to contextual and conceptual issues. Reaching a point at which the ‘maximum amount of information on the topic of interest is provided’ was far harder and required 16-24 interviews.

Therefore, if you are looking to justify a sample size using principles of data saturation, these parameters provide a useful guide. However, there is now considerable debate about the indiscriminate adoption of data saturation across all qualitative research. To understand why this is being questioned we need to examine its methodological origins.

3.7 - Origins of data saturation

The term data saturation originates from the term ‘theoretical saturation’, a key component of grounded theory methodology. In Glaser and Strauss’s13 original version of grounded theory, theoretical saturation is defined as the point at which new data add nothing new to the developing concept or theory. At this point the researcher can be confident they have found ‘the truth’. However, theoretical saturation is the product of specific approaches to sampling and analysis (i.e. theoretical sampling and constant comparison). Without these principal methods theoretical saturation is hard to achieve or evidence. Therefore, the term theoretical saturation evolved into the more familiar terms ‘data saturation’ and ‘thematic saturation’, the latter representing a point at which new data do not change the themes identified during analysis.

3.8 - The problem with data saturation

The necessity for small samples and interpretive approaches in many qualitative studies means that qualitative researchers rarely claim that their findings are most likely true for everyone else, or that their findings are the only way to make sense of the data set. From this position, data saturation is problematic because it makes a statement that there is nothing new that can come from the data. Concern has also been expressed about overuse of the term data saturation without an accompanying explanation of what it means within the context of the study.14-16 Key concerns relating to data saturation include:

- Methodological incompatibility

Taking a technique from one methodology and applying it to another can be problematic. For example, grounded theory assumes that a single ‘truth’ is possible, hence the ability to construct a unifying theory, but this is not something all qualitative researchers subscribe to. Furthermore, theoretical saturation in grounded theory can only be reached because of its accompanying techniques of theoretical sampling and constant comparison. These techniques are not often used in other research designs.

- Indiscriminate use

Data saturation has become a convenient term to add to a manuscript in place of a more in-depth review of why researchers felt their sample size was adequate to address the research question.

- Treated like a definitive test

Unlike power calculations, data saturation is not a definitive test that can estimate or prove that the sample is adequate.17 However, it is often treated as if it is.

- A priori application

Technically, data saturation is a point within the analytical process and not something that can be predicted with ease. In grounded theory recruitment continues alongside data analysis so that when theoretical saturation is reached recruitment will cease. However, in many other qualitative studies a sample size is identified a priori, participants are recruited within the parameters set, data are collected, and analysis conducted in a linear fashion. Therefore, a priori sample size estimation is ‘essentially guesswork’ 18(p.45) but statements such as ‘sample size will be determined by data saturation’ sound more convincing than ‘sample size will be determined by our best guess at what feels sufficient to answer the research question’ despite the latter probably being a more accurate representation of sample size estimation.

3.9 - Determining sample size

There are two ways to determine sample size: 1) before data collection; 2) during data analysis.

Before data collection

When writing a qualitative protocol, you will need to estimate the sample size to obtain ethical approval for the study. At this point this is your ‘best guess’ at what a sufficient sample size may be. Considerations that need to be factored into sample size estimation prior to data collection are:

- What sample sizes have comparable studies in the literature used?

- What does the theoretical literature say about sample sizes for your chosen methodology/analytical technique?

- How long do you have to complete the analysis?

- Are there distinct groups of people you want to compare within the analysis?

- Is it the individuals’ experience or experiences across individuals that you are most interested in?

When you know the answers to these questions you can provide the approval board/ethics committees some parameters for your sample size.

During data analysis

Qualitative researchers can recruit all their participants, collect all their data and then proceed to analysis in a linear fashion. In these studies, the a priori sample size chosen for approval is the final sample size for the study.

Alternatively, qualitative researchers may commence analysis alongside data collection. This way the researchers can be responsive to what they are finding in the data and adjust study methods if necessary. In these studies researchers may reach a point when they feel they have collected enough data because they are no longer making new discoveries during data analysis. Here the a priori sample size is a guide, and the final sample size is determined when the researchers choose to stop recruiting. This intuitive feeling about when to stop will be referred to by many as having reached data saturation. However, there are other related terms that may be more appropriate.

3.10 - If not data saturation what else?

There is a lot of discussion in the literature about what can, and should, replace the term data saturation in some qualitative studies.

Lincoln and Guba19 use the term ‘information redundancy’, meaning that no new information, codes or themes evolved during the analysis. Dey20 prefers the term ‘sufficiency’ as an alternative to saturation, as an expression of ‘good enough’ rather than ‘exhaustive’.18 Finally, Malterud21 introduced the concept of ‘information power’, which is dependent on five key dimensions: study aims; sample specificity; established theory; quality of dialogue; analysis strategy. However, information power is also not without its critics, e.g. Sim et al.22, who stated:

“the notion of ‘information power’ appears, albeit implicitly, to adopt a realist assumption that data are somehow extracted from participants, suggesting the incremental gaining of objective information – an epistemological stance we have proposed is at odds with approaches that consider themes as being developed as part of an ongoing interpretive analysis”22(p.629-630)

It is clear, then, that justification of sample size is contentious and there is no simple term that represents what is an intuitive and highly subjective decision. It is therefore argued that no term should be used in a mechanistic and thoughtless way. Instead, there should be more explicit explanation of how decisions were made and transparency about why researchers felt comfortable to stop the analysis when they did. This discussion should include why the sample is considered to be…

- Adequate – so that findings are transferable to other contexts.

- Appropriate – to answer the research question.

- Aligned – with the research question and methodological orientation.18

Key Points

What is data saturation?

→ Data saturation is both an overused term and a poorly described process in medical literature.

→ Data saturation includes both saturation of information from the sample and saturation of meaning relevant to the target population.

→ During protocol development, researchers should estimate a sample size based on where they think saturation may occur; and during data analysis, they should evaluate if data saturation was indeed reached.

3.11 - Unit summary

In this unit we have explored how to determine a sample size for a qualitative study. We have explained the power of small samples and that larger samples are not always preferable within this context. We have examined the concept of data saturation and presented some of the more contemporary debates about why this may not always be the appropriate way to determine or justify the final sample in some qualitative studies. Finally, we introduced other means of justifying sample sizes that may be more appropriate. In the next unit we describe how to enact the most common data collection method in qualitative inquiry, the semi-structured interview.

References

1. Holloway I, Galvin K. Qualitative Research in Nursing and Healthcare. 4th ed. John Wiley & Sons Inc.; 2017.

2. Patton MQ. Qualitative Research and Evaluation Methods. 3rd ed. Sage Publications; 2002.

3. Palinkas LA, Horwitz SM, Green CA, Wisdom JP, Duan N, Hoagwood K. Purposeful Sampling for Qualitative Data Collection and Analysis in Mixed Method Implementation Research. Adm Policy Ment Health. 2015;42(5):533-544. doi:10.1007/s10488-013-0528-y

4. Flick U. Designing Qualitative Research. SAGE Publications; 2007.

5. Merriam SB. Qualitative Research: A Guide to Design and Implementation. 3rd ed. Jossey-Bass; 2009.

6. Schreier M. Sampling and Generalization. In: Flick U, ed. The SAGE Handbook of Qualitative Data Collection. SAGE; 2018.

7. Moser A, Korstjens I. Series: Practical guidance to qualitative research. Part 3: Sampling, data collection and analysis. Eur J Gen Pract. 2018;24(1):9-18. doi:10.1080/13814788.2017.1375091

8. Hennink MM, Kaiser BN, Marconi VC. Code Saturation Versus Meaning Saturation: How Many Interviews Are Enough? Qual Health Res. 2017;27(4):591-608. doi:10.1177/1049732316665344

9. Jones SR, Carley S, Harrison M. An introduction to power and sample size estimation. Emergency Medicine Journal. 2003;20(5):453-458. doi:10.1136/emj.20.5.453

10. Critical Appraisal Skills Programme. CASP Checklist: 10 questions to help you make sense of a Qualitative research. Published 2018. https://casp-uk.net/

11. Tong A, Sainsbury P, Craig J. Consolidated criteria for reporting qualitative research (COREQ): a 32-item checklist for interviews and focus groups. Int J Qual Health Care. 2007;19(6):349-357. doi:10.1093/intqhc/mzm042

12. Guest G, Bunce A, Johnson L. How Many Interviews Are Enough?: An Experiment with Data Saturation and Variability. Field Methods. 2006;18(1):59-82. doi:10.1177/1525822X05279903

13. Glaser BG, Strauss AL. The Discovery of Grounded Theory: Strategies for Qualitative Research. Aldine; 1967.

14. Morse JM. The significance of saturation. Qualitative Health Research. 1995;5(2):147-149.

15. Bowen GA. Naturalistic inquiry and the saturation concept: a research note. Qualitative Research. 2008;8(1):137-152. doi:10.1177/1468794107085301

16. Caelli K, Ray L, Mill J. ‘Clear as Mud’: Toward Greater Clarity in Generic Qualitative Research. International Journal of Qualitative Methods. 2003;2(2):1-13. doi:10.1177/160940690300200201

17. Kerr C, Nixon A, Wild D. Assessing and demonstrating data saturation in qualitative inquiry supporting patient-reported outcomes research. Expert Rev Pharmacoecon Outcomes Res. 2010;10(3):269-281. doi:10.1586/erp.10.30

18. Varpio L, Ajjawi R, Monrouxe LV, O’Brien BC, Rees CE. Shedding the cobra effect: problematising thematic emergence, triangulation, saturation and member checking. Med Educ. 2017;51(1):40-50. doi:10.1111/medu.13124

19. Lincoln YS, Guba EG. Naturalistic Inquiry. Sage; 1985.

20. Dey I. Grounding Grounded Theory. Guidelines for Qualitative Inquiry. Academic Press; 1999.

21. Malterud K. Qualitative research: standards, challenges, and guidelines. The Lancet. 2001;358(9280):483-488. doi:10.1016/S0140-6736(01)05627-6

22. Sim J, Saunders B, Waterfield J, Kingstone T. Can sample size in qualitative research be determined a priori? International Journal of Social Research Methodology. 2018;21(5):619-634. doi:10.1080/13645579.2018.1454643